Prototyping With Neural Networks

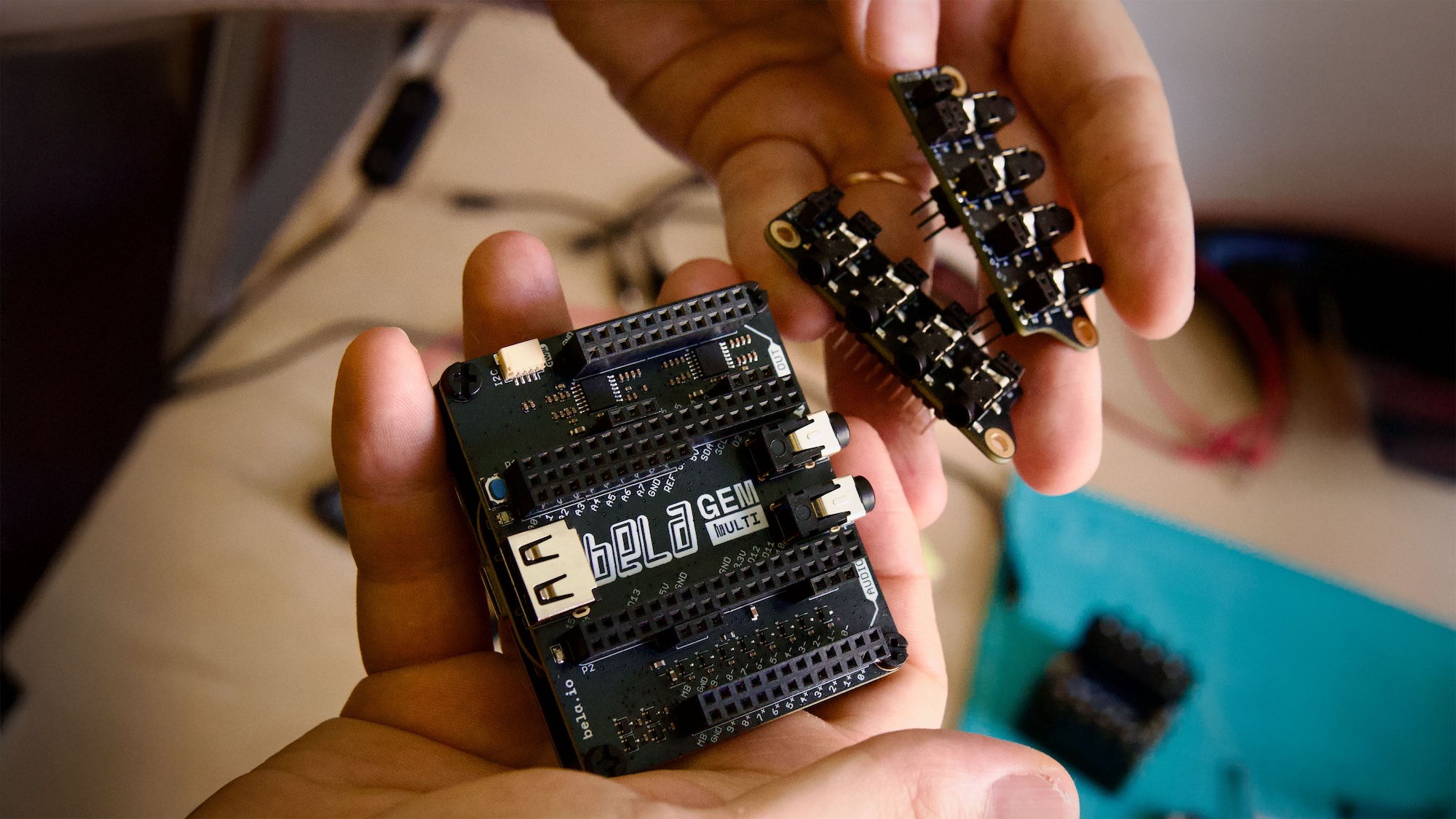

Running AI models with Bela Gem

There’s growing excitement around running neural networks on embedded systems. As software libraries become more sophisticated and embedded computers more powerful, real-time machine learning on small devices is becoming more practical. Bela Gem offers a compelling environment for working with embedded AI thanks to its power, connectivity and ease of development.

Bela Gem is based on a quad-core 1.4 GHz processor and runs Linux, so it offers much more computational power, memory, and storage than a typical microcontroller. It gives you access to embedded AI libraries like libtorch, TFLite (now LiteRT) or RTNeural, and its onboard audio, analog-to-digital converters, and digital I/O make it ideal for sensing and real-time sound. All this makes Bela Gem a powerful platform for running small-to-medium AI models on sensor or audio signals, without the need for extra hardware or complicated toolchains.

Bela’s IDE gets you straight into writing code, and our C++ API makes it easy to use all four CPU cores.

But there’s another crucial part of embedded AI that Bela Gem can help with: data. Collecting data, training a model, and deploying it back to an embedded system can be a slow and awkward process. That’s where Teresa Pelinski’s research comes in.

Introducing pybela: Smarter AI Workflows for Bela

Teresa Pelinski is a final-year PhD student at the Augmented Instruments Lab at Queen Mary University of London. Her PhD is the first to be sponsored by Bela, and as part of her research she has been exploring how neural networks can support creative practice on embedded systems.

During an initial internship with Bela in 2023, Teresa began developing pybela, a Python library that allows real-time communication between a C++ Bela program and a Python script or Jupyter notebook. This makes it easy to send and receive data while your Bela code is running, which is useful for machine learning pipelines as well as for data-driven experiments and real-time performance.
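To give a feel for this kind of workflow, here is a minimal sketch of the general pattern: one side produces blocks of sensor samples while a Python script collects them as they arrive. It does not use pybela's actual API. The names `fake_bela_program`, `collect_blocks`, `run_session` and `BLOCK_SIZE` are hypothetical, and a background thread with a sine wave stands in for a running Bela program.

```python
import math
import queue
import threading

BLOCK_SIZE = 8  # hypothetical number of samples sent per block


def fake_bela_program(out_queue, n_blocks):
    """Stand-in for a running Bela C++ program: emits blocks of samples."""
    for b in range(n_blocks):
        start = b * BLOCK_SIZE
        block = [math.sin(0.1 * (start + i)) for i in range(BLOCK_SIZE)]
        out_queue.put(block)
    out_queue.put(None)  # sentinel: no more data


def collect_blocks(in_queue):
    """Python side: gather incoming blocks into one flat list of samples."""
    samples = []
    while True:
        block = in_queue.get()  # waits until the next block arrives
        if block is None:
            break
        samples.extend(block)
    return samples


def run_session(n_blocks=4):
    """Run the producer in the background and collect everything it sends."""
    q = queue.Queue()
    producer = threading.Thread(target=fake_bela_program, args=(q, n_blocks))
    producer.start()
    samples = collect_blocks(q)
    producer.join()
    return samples


if __name__ == "__main__":
    data = run_session()
    print(len(data), "samples received")
```

In a real setup the producer would be the Bela program and the transport would be handled by the library; the point here is only that the Python side can keep receiving data while the other side keeps running.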

pybela encourages a different kind of AI practice. Instead of large black-boxed models trained on massive datasets, it supports a more experimental, embodied approach: working with small, custom datasets and creatively engaging with the constraints of embedded hardware. This plays to Bela’s strengths: it is designed for low-latency, real-time interaction.
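As a rough illustration of what working with a small, custom dataset can look like, the sketch below trains a tiny two-feature classifier and exports its parameters as C++ constants that an embedded program could include. Everything in it is an assumption made for illustration: the data is synthetic rather than recorded from sensors, the model is plain logistic regression, and the names `make_dataset`, `train`, `export_header`, `kWeights` and `kBias` are hypothetical.

```python
import math
import random


def make_dataset(n_per_class=20, seed=0):
    """Synthetic stand-in for a small, hand-collected sensor dataset.

    Each example is ([mean level, range], label) for one sensor window.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n_per_class):
        data.append(([rng.gauss(0.2, 0.05), rng.gauss(0.1, 0.05)], 0))
        data.append(([rng.gauss(0.8, 0.05), rng.gauss(0.6, 0.05)], 1))
    return data


def predict(weights, bias, x):
    """Logistic regression: probability that x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(data, epochs=200, lr=0.5):
    """Plain stochastic gradient descent on the log loss."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            err = predict(weights, bias, x) - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def export_header(weights, bias):
    """Format the trained parameters as C++ constants (hypothetical names)."""
    w = ", ".join(f"{v:.6f}f" for v in weights)
    return (f"const float kWeights[{len(weights)}] = {{{w}}};\n"
            f"const float kBias = {bias:.6f}f;\n")


if __name__ == "__main__":
    dataset = make_dataset()
    weights, bias = train(dataset)
    print(export_header(weights, bias))
```

A model this small is only a placeholder, but the loop it shows (gather a few dozen examples, train in seconds, move the result back to the device) is the kind of fast iteration that small datasets make possible.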

Learn about pybela and embedded AI

In this video, Teresa introduces the pybela project and explains how it enables faster prototyping and new creative workflows for embedded AI.

Learn more about Teresa’s research on the Bela Blog and in her paper, “pybela: a Python library to interface scientific and physical computing”.