Bela's first PhD student

Interview with Teresa Pelinski about AI, embedded computing and musical instruments

In this post we have the great pleasure of introducing Teresa Pelinski, the first ever PhD student to be sponsored by us at Bela! She will be completing a four-year, fully funded PhD as part of the Artificial Intelligence and Music Doctoral Programme at Queen Mary University of London. At the end of the first year of her studies, we thought this was a good moment to do an interview and find out more about her background and research.

Considering AI for musical instruments

We’re very excited that you are the first ever Bela-sponsored PhD candidate – welcome to the team and to the lab! To begin with, can you introduce yourself and tell us a bit about your experience before starting at Queen Mary?

I’m very excited too! And really thankful for the opportunity of doing my PhD with Bela and the Augmented Instruments Lab. I’m from Spain; I grew up in Girona and Madrid. I did my BSc in Physics at the Universidad Autónoma de Madrid, where I became very interested in the acoustics of musical instruments. Since there weren’t any modules related to sound in my department, I spent two semesters abroad at the RWTH Aachen (Germany), studying acoustics and writing my bachelor thesis at the Institute of Technical Acoustics. Even though problem-solving in physics is definitely a creative task, I often felt like I could not relate my studies to the creative things I was doing on the side (playing instruments, drawing and writing). In my opinion, the physics degree also lacked a bit in critical thought on science and technology more broadly.

The Reactable synthesizer by Jordà, Kaltenbrunner, Geiger and Alonso.

In the meantime, I enjoyed learning to code, so I was very happy to find out about the Sound and Music Computing MSc at the Universitat Pompeu Fabra. There I learnt about the work on digital lutherie of Sergi Jordà, who became my master’s thesis supervisor. The image above is of the Reactable, one of the most famous instruments from that department, which has been on tour with Björk and many other musicians. I thought and still think that digital lutherie is a very particular combination of technology and arts that lets me explore many fields I am very interested in, such as design, human-computer interaction and musicology. That’s what motivated me to apply for the Artificial Intelligence and Music PhD programme at Queen Mary.

What are your impressions of London after the 1st year?

It was hard at first, and it took me a few months to adapt to the prices, especially rent and the cost of eating and drinking out. Without sounding too harsh, living in London has really given me perspective and made me appreciate the quality of life in Spain! That being said, gigs are not too expensive (especially with student discounts), and there’s always something interesting going on. A very cool gig I’ve seen is the London Contemporary Orchestra playing the Disintegration Loops by William Basinski. An orchestra playing notated tape loops… It’s very exciting to be able to attend gigs that make me think about things I read on instrumental and musical practice for my thesis.

What led you to the world of digital music in the first place?

I’ve always been fond of digital technologies; I got my first computer when I was 5 (which now sounds crazy, but at the time, the internet didn’t seem as scary as it does today!). I think my interest in digital music kicked off when I found out about Fourier transforms and series in the last years of high school. I became obsessed with the idea of being able to describe sounds mathematically; it felt like pure magic. This was what pushed me towards studying physics, and acoustics, and later, sound computing.

Are you a musician yourself?

Not really, to be honest. I played cello as a kid and electric guitar and piano as a teenager, but it’s been many years since I practised regularly. I also had some cameos as a DJ while I was studying in Germany in 2019. Last year I also did an artistic residency at the Phonos Foundation with a sound art piece with AI-generated voices. Whether that’s music or not is up to the listener to decide…

Can you tell us a bit about your PhD topic?

My project is about making digital instruments more sensitive to the subtlety and nuance of a musical gesture. I think it’s about finding the sweet spot between having lots of gestural possibilities for playing an instrument, and having full control of your instrument so that you can play with intentionality. There are many ways of mapping gesture and sound, and you always need to make decisions about how you play an instrument, and hence the music which can be made with it. You might link many gestural dimensions to many sound parameters, and the instrument might be great to experiment with, but it will be very hard to control and to play the same piece twice. Or you might link many gestural dimensions to only a few sound parameters, and your instrument will be very subtle, but the music you can make with it will always sound quite similar. My research tries to introduce AI to the mapping from gesture to sound in order to find a balance between subtle control and sonic flexibility and variety.
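To make the mapping trade-off a little more concrete, here is a minimal sketch in Python. It is only an illustration under our own assumptions, not code from Teresa's research: the `map_gesture` function, the four gesture dimensions and all the weights are hypothetical, and a plain weighted sum stands in for whatever mapping a real instrument would use.

```python
def map_gesture(gesture, weights):
    """Map gesture dimensions to sound parameters with a weighted sum.

    Each row of `weights` produces one sound parameter, so the number of
    rows sets how many sound parameters the performer can reach.
    """
    return [sum(w * g for w, g in zip(row, gesture)) for row in weights]


# Hypothetical gesture reading (e.g. pressure, tilt, speed, position), each in [0, 1].
gesture = [0.8, 0.2, 0.5, 0.1]

# Many gestural dimensions linked to many sound parameters:
# flexible, but every small movement changes several things at once.
many_to_many = [
    [0.7, 0.1, 0.1, 0.1],
    [0.1, 0.7, 0.1, 0.1],
    [0.1, 0.1, 0.7, 0.1],
    [0.1, 0.1, 0.1, 0.7],
]

# Many gestural dimensions linked to a single sound parameter:
# easy to control, but the sonic range is narrow.
many_to_few = [
    [0.25, 0.25, 0.25, 0.25],
]

rich_sound = map_gesture(gesture, many_to_many)    # four sound parameters
simple_sound = map_gesture(gesture, many_to_few)   # one sound parameter
print(rich_sound, simple_sound)
```

In this toy version the weights are fixed by hand; the research described above is about letting a learned model take on that role instead.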

You ran a workshop at NIME 2022, can you tell us a bit about how it went?

It went great! I co-organised it with other members of the Augmented Instruments Lab, the Intelligent Instruments Lab (Reykjavík) and the Emute Lab (Sussex). The workshop was on embedded AI for new interfaces for musical expression. This means, in short, figuring out how to run AI techniques that are usually computationally expensive on embedded computers such as Bela, which have much more limited computational resources - and all of this in the context of digital musical instruments! It was very interesting to hear about what other researchers are doing, especially because there is currently not much published on the topic. All the presentations and discussion are on the Instruments Lab YouTube channel and you can find part 1 below:

What’s next for your research?

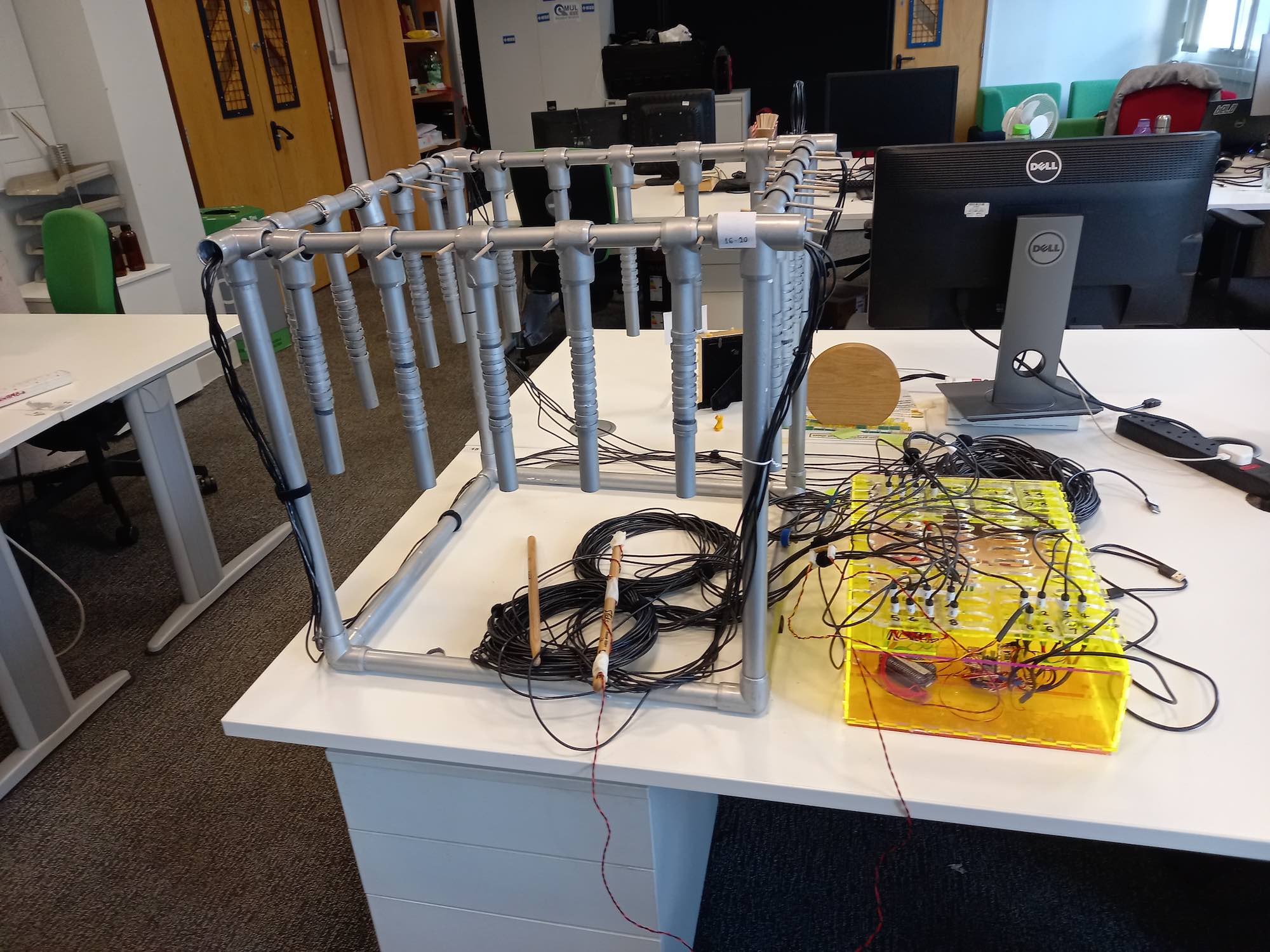

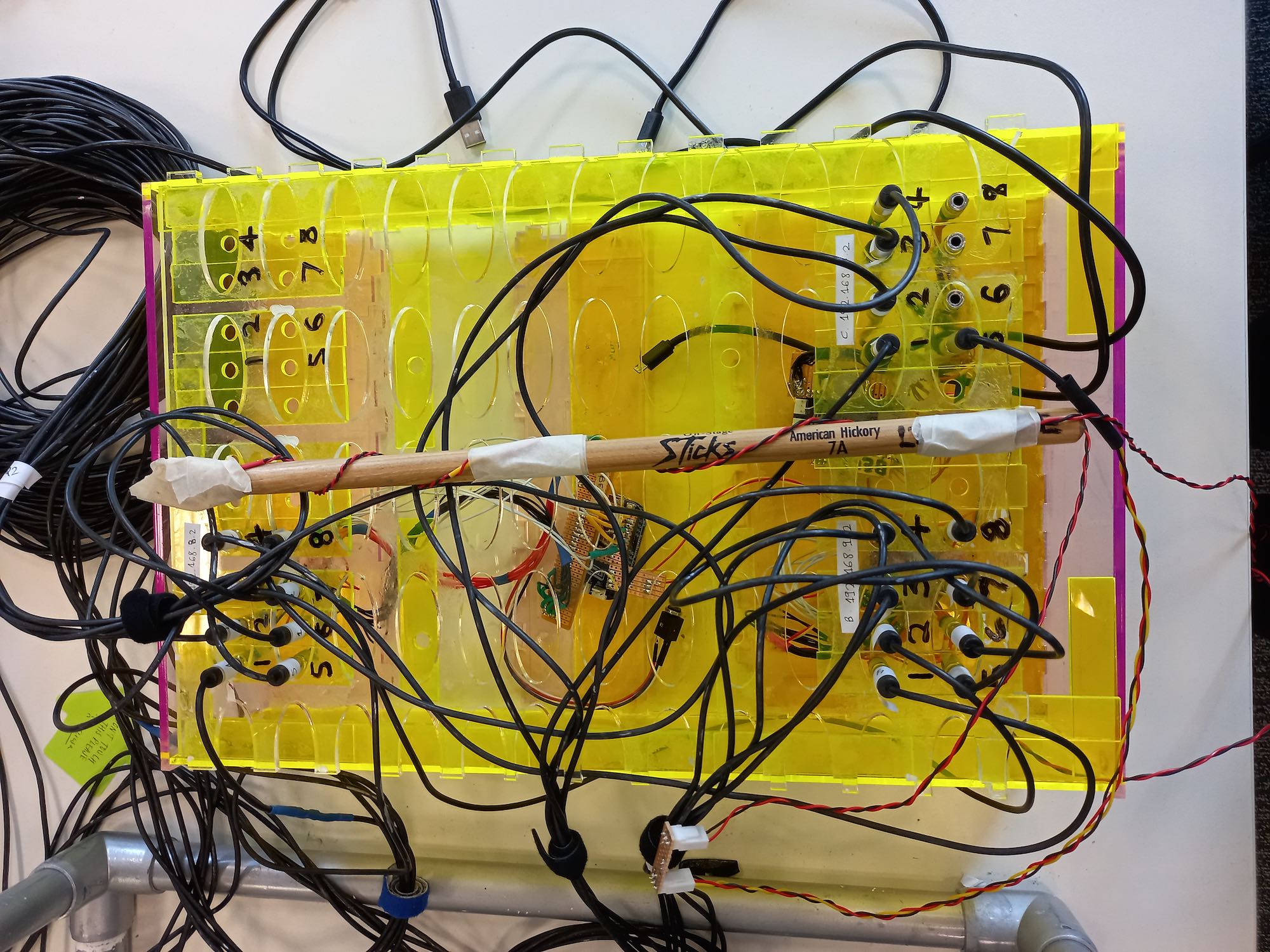

I’m currently working on a version of the Chaos Bells, a large instrument by Lia Mice. The Chaos Bells has 20 pendulums, each with an accelerometer measuring the angle at which the pendulums are bent when the performer interacts with them. I am recording a dataset of signals from these accelerometers, and with these signals, I am going to train a model that will be able to predict how a sensor signal is going to behave given only the first milliseconds of that signal. With this, I would like to build an instrument that only sounds when it’s performed in unexpected ways.

A scaled-down version of the Chaos Bells by Lia Mice, which Teresa is using as a test bed in her research.
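As a rough illustration of the "only sounds when it's performed in unexpected ways" idea, the sketch below gates sound on prediction error. It is not Teresa's model: a simple linear extrapolation from the last two samples stands in for the trained predictor, and the `surprise_gate` function, the threshold and the example signals are all hypothetical.

```python
def surprise_gate(signal, threshold=0.1):
    """Return one True/False gate per sample, starting at the third sample.

    A stand-in predictor extrapolates a straight line through the two
    previous samples. The gate opens (True) only when the actual sample
    differs from that prediction by more than `threshold`.
    """
    gates = []
    for n in range(2, len(signal)):
        predicted = 2 * signal[n - 1] - signal[n - 2]  # linear extrapolation
        error = abs(signal[n] - predicted)             # how surprising the sample is
        gates.append(error > threshold)
    return gates


# Hypothetical accelerometer readings from one pendulum.
smooth = [0.0, 0.1, 0.2, 0.3, 0.4]   # steady bend: fully predictable
jolt = [0.0, 0.1, 0.2, 0.9, 1.0]     # sudden jump at the fourth sample

print(surprise_gate(smooth))  # gate stays closed
print(surprise_gate(jolt))    # gate opens around the jump
```

Swapping the one-line extrapolation for a learned model would keep the same structure: predict, compare with what the sensor actually did, and let the difference decide whether the instrument sounds.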

Are there any aspects of your research which you would like to get community feedback on?

My research is still in the early stages, so I haven’t shared anything with the community yet. I will share the tools I will use to deploy the deep learning models on Bela, which I hope will be useful to the community. My colleague Rodrigo Díaz at the Centre for Digital Music has made significant efforts in this direction, and I plan to continue with this and prepare some accessible examples that might help whoever is interested in getting started with deep learning on Bela. It would be great to get some feedback on those, but as I said, there’s still some work to be done before getting there!

To finish let’s zoom out to the bigger picture. Where do you see music technology going in the next decade?

This is a very difficult question. I can see how current research on production tools will get integrated into production workflows. For example, I think there are very strong cases for automatic mixing or sample collection exploration. However, in the case of musical instruments, it is difficult to tell because there’s not really a problem to solve: we already make music with the instruments we have, and we make it very well. Moreover, as my PhD supervisor Andrew McPherson says, many musicians are reluctant to adopt new instruments, and this is understandable for many reasons: there’s repertoire, established techniques and pedagogical practices for their instruments, which is not the case in new digital musical instruments.

Xenia Pestova Bennett playing the Magnetic Resonator Piano created by Andrew McPherson who heads the Augmented Instruments Lab.

In this sense, I don’t see new instruments getting broadly adopted. However, I hope that using AI for coupling gesture and sound in musical instruments will give way to a new generation of musical instruments, and that we will see musical gesture gaining relevance in live electronic music performance.

Thank you Teresa, we’ll keep updates coming as the research progresses!