Teaching Spotlight

Music and Audio Programming at Queen Mary University of London

Music and Audio Programming is a master’s level course taught by Bela inventor Professor Andrew McPherson at our spiritual home, Queen Mary University of London. The full materials for this course are available for free online. In this post we review some of the excellent student projects submitted this year.

Modular image by Nina Richards, wikimedia (CC-BY 3.0)

Training the instrument inventors of the future

Bela was born five years ago in the Augmented Instruments Laboratory at Queen Mary University of London, and one of its earliest applications was as a learning platform for a master’s-level course now called Music and Audio Programming. Last year we turned this course into an online set of lectures, which are freely available on YouTube alongside examples and slides in the course repository. At Queen Mary the course is taught in person, with supplementary materials and supervision, on the following degree programmes:

- MSc in Sound and Music Computing, a one-year Master’s programme

- PhD in Artificial Intelligence and Music, a 4-year PhD programme with fully-funded studentships for UK and international students

For a taster of what the course is like, check out the introductory class here. You can find the rest of the lectures on our YouTube channel, and there are more special classes coming soon, so be sure to subscribe.

You can also find this course as part of HackadayU, an alternative grad school for hardware hackers created by Hackaday.

What’s covered in the course?

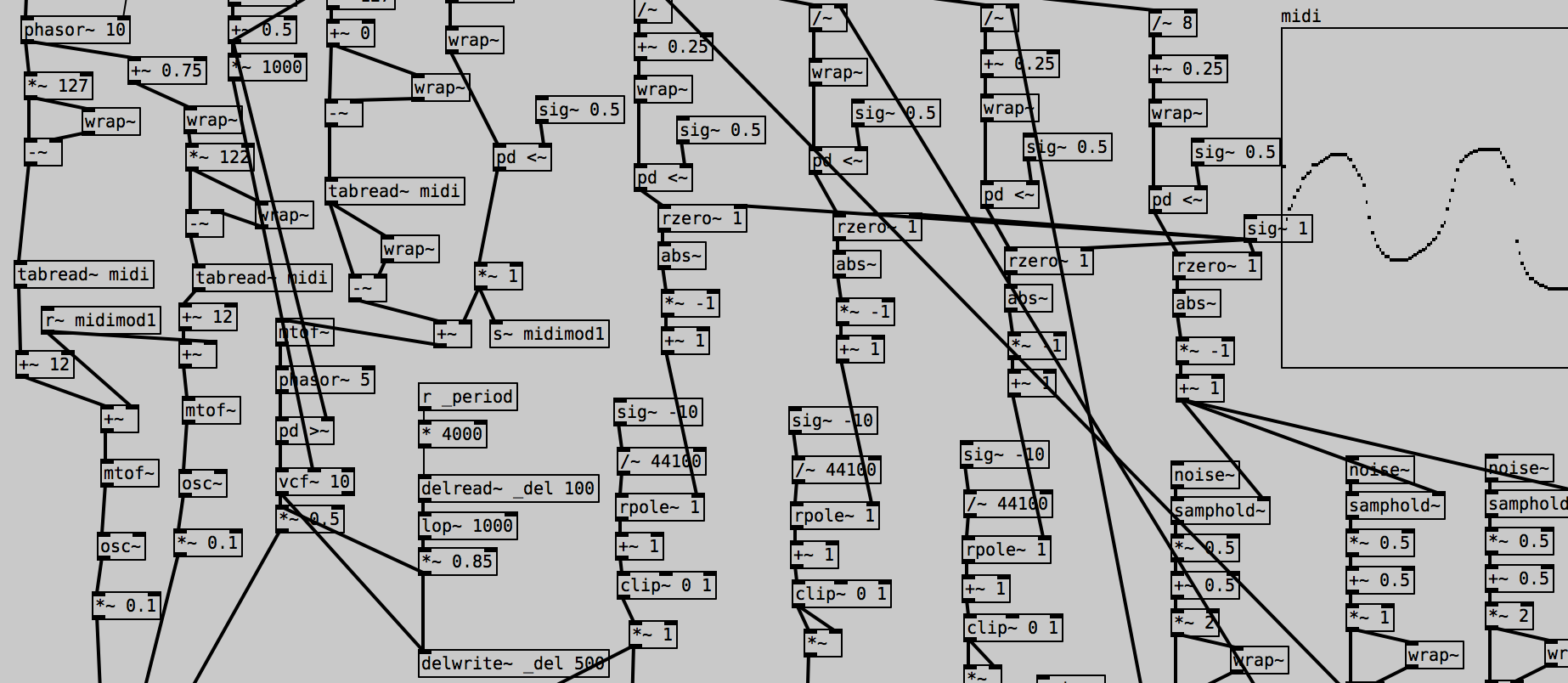

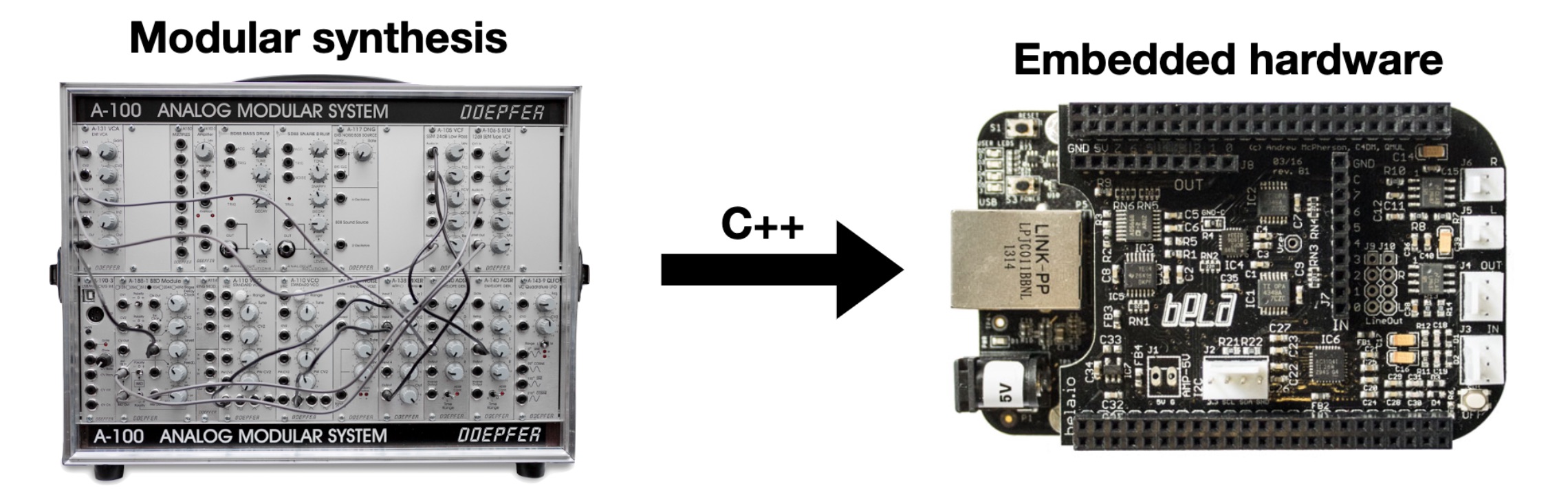

Music and Audio Programming is a deep dive into the theory of how Bela processes data in real time, as well as a practical guide to building interactive systems involving sensors and sound. As well as serving as a broad introduction to C++ programming for audio, this course also teaches you how to create the basic building blocks of a modular synth, with a focus on their implementation in C++. In the later lectures we even dive into more advanced topics like assembly programming and fixed-point arithmetic for audio processing. See the table below for an overview of the topics covered:

| Programming topics | Music/audio topics |
|---|---|
| Working in Real-Time, Buffers and arrays, Parameter control | Oscillators, Samples, Wavetables |
| Classes and objects, Analog and digital I/O, Filtering | Filters, Control voltages, Gates and triggers |
| Circular buffers, Timing in real time, State machines | Delays and delay-based effects, Metronomes and clocks, Envelopes |
| MIDI, Block-based processing, Threads | ADSR, MIDI, Additive synthesis |
| Fixed point arithmetic, ARM assembly language | Phase vocoders, Impulse reverb |

Bela’s mission has always been to make high-performance computing accessible to all. This isn’t just a course for engineers - this is a course geared towards people who have a bit of technical knowledge and want to improve their C++ skills by learning how to implement practical projects involving audio and sensors using the Bela platform.

Final projects

Below is a selection of just a few of the final student projects submitted this year. The quality of the submissions was extremely high, even though the pandemic limited face-to-face tutorial time. Congratulations to all the students for their hard work, and thanks for giving us permission to share your projects. If you would like to get in touch with any of the students about their projects, please email us at info(at)bela.io and we will put you in contact.

Open-source Autotune

Xavier Riley implemented the famous and ever-present Autotune algorithm, which was first developed for the Antares plugin. Although this plugin is arguably one of the most successful and influential music applications, it is still poorly understood and there are no open-source implementations currently available. This is surprising given its widespread popularity in modern music and the fact that the main details of the implementation have been publicly documented in the (now expired) original patent. Xavier took it upon himself to implement a version which runs on Bela.

Real-time Schroeder Reverb

Madeline Ann Hamilton implemented a real-time reverberation effect running on Bela. This project’s central algorithm was the reverb algorithm invented by Manfred Schroeder in the seminal 1962 paper “Natural Sounding Artificial Reverberation”. The implementation sounds great and we hope to include this as a default example on Bela in the future.
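For readers unfamiliar with this family of reverbs, here is a minimal C++ sketch of the general structure usually associated with Schroeder's design: a bank of parallel feedback comb filters followed by allpass filters in series. It is not Madeline's code. The class names, the number of filters, and every delay length and gain below are illustrative assumptions chosen for a 44.1 kHz sample rate.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Feedback comb filter: y[n] = x[n - D] + g * y[n - D]
class CombFilter {
public:
    CombFilter(std::size_t delaySamples, float feedback)
        : buffer_(delaySamples, 0.0f), feedback_(feedback) {
        assert(delaySamples > 0);
    }
    float process(float in) {
        float delayed = buffer_[index_];            // value written D samples ago
        buffer_[index_] = in + feedback_ * delayed; // feed the output back in
        index_ = (index_ + 1) % buffer_.size();     // advance the circular buffer
        return delayed;
    }
private:
    std::vector<float> buffer_;
    float feedback_;
    std::size_t index_ = 0;
};

// Allpass filter: y[n] = -g * x[n] + x[n - D] + g * y[n - D]
class AllpassFilter {
public:
    AllpassFilter(std::size_t delaySamples, float gain)
        : buffer_(delaySamples, 0.0f), gain_(gain) {
        assert(delaySamples > 0);
    }
    float process(float in) {
        float delayed = buffer_[index_];
        float v = in + gain_ * delayed;             // internal state of the delay line
        buffer_[index_] = v;
        index_ = (index_ + 1) % buffer_.size();
        return delayed - gain_ * v;
    }
private:
    std::vector<float> buffer_;
    float gain_;
    std::size_t index_ = 0;
};

// Hypothetical reverb: four parallel combs into two series allpasses.
// All delay lengths (in samples) and gains are illustrative assumptions.
class SchroederReverb {
public:
    SchroederReverb()
        : combs_{CombFilter(1687, 0.773f), CombFilter(1601, 0.802f),
                 CombFilter(2053, 0.753f), CombFilter(2251, 0.733f)},
          allpasses_{AllpassFilter(347, 0.7f), AllpassFilter(113, 0.7f)} {}
    float process(float in) {
        float sum = 0.0f;
        for (auto& comb : combs_) sum += comb.process(in);
        float out = 0.25f * sum;                    // average the comb outputs
        for (auto& ap : allpasses_) out = ap.process(out);
        return out;
    }
private:
    std::vector<CombFilter> combs_;
    std::vector<AllpassFilter> allpasses_;
};
```

On Bela, a `process()` call like this would typically sit inside the per-sample loop of the audio callback. The comb delay lengths are kept mutually unequal so that their echoes do not pile up at the same instants.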

Vector Phaseshaping Monosynth

Eoin Roe implemented a full monophonic synthesiser with quasi-low pass filter, an arpeggiator, low frequency oscillators and other modulation sources. The synthesiser uses at its core Vector Phaseshaping Synthesis, which is an exciting synthesis technique developed at Aalto University School of Electrical Engineering in Helsinki, Finland. You can hear and see it in action in the video.

Electronic Tap Shoe

Louise Thorpe transformed a pair of shoes into an interactive musical interface. She placed a series of force-sensitive resistors on the soles of a pair of shoes and used the signal from these sensors to trigger samples and apply effects in real time to sound files as they played back. In the demo video Louise shows the different modes of operation.

Drum Controlled Cross-adaptive Audio Effects

Saurjya Sarkar created a cross-adaptive audio effect that is specifically tailored for drum input signals. The effects applied to a base audio track are altered depending on the quality of a second audio signal, allowing for a variety of different dependent behaviours. In the demo video Saurjya shows the effect in action, with a synth track being altered by a bass drum track that controls filtering effects and gating or ducking of the track amplitude.
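The ducking behaviour described above can be sketched in a few lines of C++. This is a simplified illustration rather than Saurjya's implementation: it assumes a one-pole envelope follower on the drum signal whose output scales the gain of the synth signal. The names `EnvelopeFollower` and `duck`, and the coefficient values in the usage note, are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// One-pole envelope follower with separate attack and release smoothing.
// Coefficients are in [0, 1): 0 tracks instantly, values near 1 react slowly.
class EnvelopeFollower {
public:
    EnvelopeFollower(float attackCoeff, float releaseCoeff)
        : attack_(attackCoeff), release_(releaseCoeff) {
        assert(attackCoeff >= 0.0f && attackCoeff < 1.0f);
        assert(releaseCoeff >= 0.0f && releaseCoeff < 1.0f);
    }
    float process(float in) {
        float level = std::fabs(in);                       // rectify the control signal
        float coeff = (level > env_) ? attack_ : release_; // rising or falling?
        env_ = coeff * env_ + (1.0f - coeff) * level;
        return env_;
    }
private:
    float attack_;
    float release_;
    float env_ = 0.0f;
};

// Reduce the target sample's gain as the control envelope rises.
// depth = 0 leaves the target untouched, depth = 1 silences it at full envelope.
float duck(float target, float envelope, float depth) {
    float gain = 1.0f - depth * std::min(envelope, 1.0f);
    return target * gain;
}
```

In a per-sample loop this might be used as `out = duck(synthSample, follower.process(drumSample), 0.8f)`. The same envelope could just as well drive a filter cutoff instead of a gain.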

“Scenequencer” for Launchpad Pro

Samuel Morgan created a performance-oriented multi-FX unit. The unit is controlled using a Launchpad Pro, with information transmitted to and from the user via the RGB pads. The key feature of this unit is that the user is able to create and move freely between various global configurations of effect parameters, known as ‘scenes’, and that these scenes may be sequenced in time to an input MIDI clock signal (transmitted to Bela over USB). Check out the video for a full explanation.

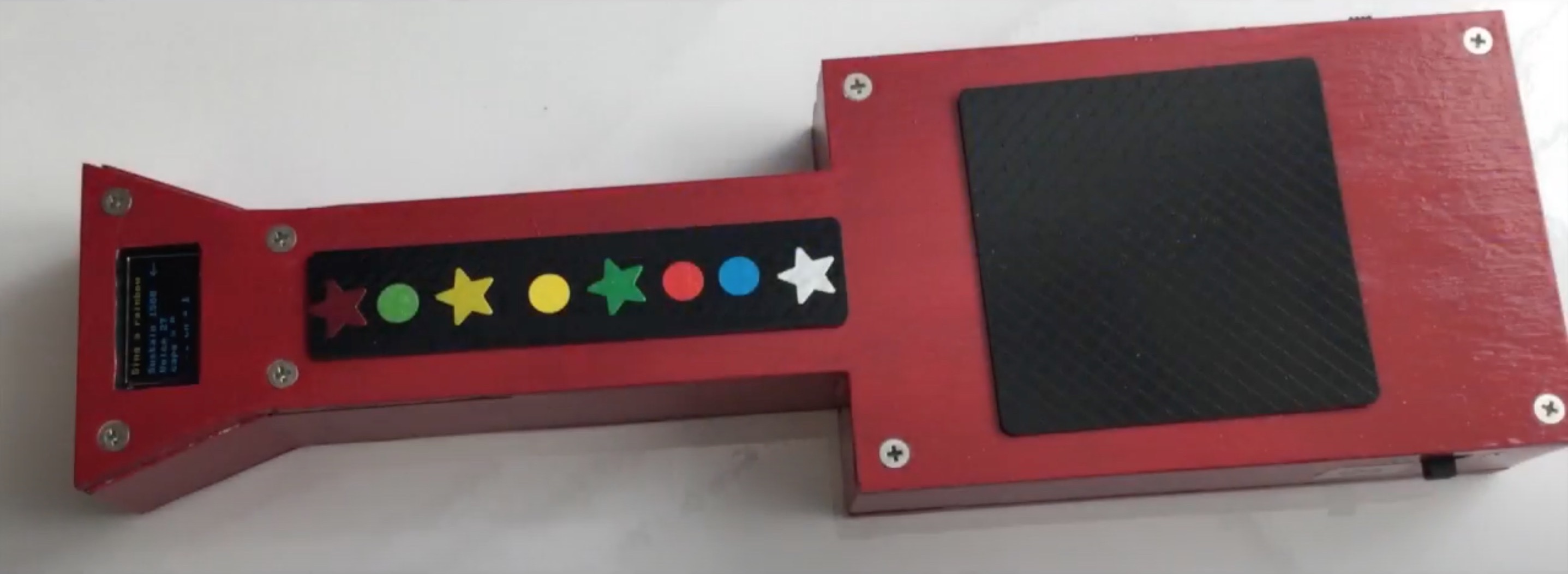

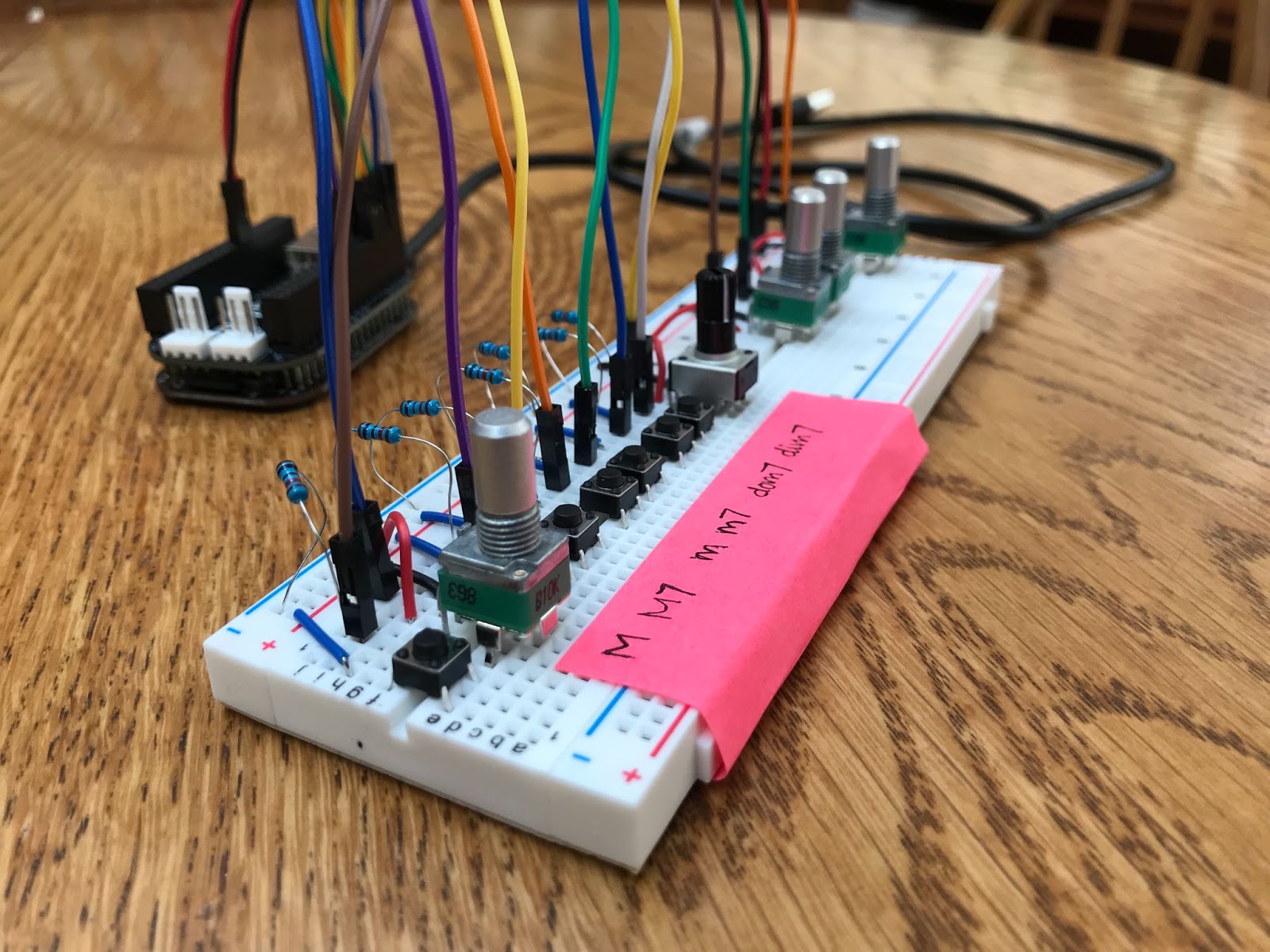

Drum Machine inspired by the LinnDrum

Tony Trup built a drum machine loosely based on the LinnDrum, the classic drum machine manufactured by Linn Electronics between 1982 and 1985 and featured on countless hit records in the 1980s. The drum machine includes variable tempo, quantisation to rhythmic subdivisions (8th, 16th or 32nd notes), the ability to apply a variable amount of ‘swing’ to each set of subdivisions individually, and variable loop length (up to 16 beats). Find out more about Tony’s work on his site.
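To make quantisation and swing concrete, here is a small C++ sketch. It is not taken from Tony's project. It assumes time is counted in integer ticks with 96 ticks per quarter note, and it assumes swing means delaying every second grid step by a fraction of the grid spacing. The function names and the tick resolution are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Assumed resolution: 96 ticks per quarter note, giving
// 8th notes = 48 ticks, 16th notes = 24 ticks, 32nd notes = 12 ticks.
constexpr int kTicksPerQuarter = 96;

// Snap a tick position to the nearest grid line (ties round up).
int quantise(int tick, int gridTicks) {
    assert(tick >= 0 && gridTicks > 0);
    return ((tick + gridTicks / 2) / gridTicks) * gridTicks;
}

// Delay off-beat grid steps (the 2nd, 4th, ... step) by swing * gridTicks.
// swing = 0 gives straight timing; values approaching 1 push the off-beat
// almost onto the following on-beat step.
int applySwing(int quantisedTick, int gridTicks, float swing) {
    assert(gridTicks > 0 && swing >= 0.0f && swing < 1.0f);
    int step = quantisedTick / gridTicks;
    bool offBeat = (step % 2) == 1;
    if (!offBeat) return quantisedTick;
    return quantisedTick + static_cast<int>(std::lround(swing * gridTicks));
}
```

For example, a hit recorded at tick 30 on a 16th-note grid snaps to tick 24, and a swing of 0.5 then moves it to tick 36. Keeping one swing value per grid size would allow each set of subdivisions to be swung independently.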

Groove Box

James Bolt created a 7-voice polyphonic synth alongside a drum machine. Reverb and compression effects were also added to the synthesiser to create a full groove box.

Zero-latency Convolution Reverb

Christian Steinmetz developed a zero-latency convolution reverb for his final project. Convolution has many applications in audio, such as equalisation and artificial reverberation. Unfortunately, performing convolution with large filters, as is common in artificial reverberation, can be computationally expensive. Block-based convolution that takes advantage of the FFT, performing filtering in the frequency domain, can significantly accelerate this operation, but comes at the cost of higher latency. To balance these forces, so-called ‘zero-latency’ convolution has been proposed, which breaks up the filter so that the early part is implemented with the direct form and later blocks are implemented with the FFT.
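The idea of splitting the filter can be checked with a short offline C++ sketch. It is not Christian's implementation and it is not real-time: it only shows that convolving with the early part and the late part of an impulse response separately, then adding the late result back with the right delay, reproduces the full convolution. For brevity the late part uses plain time-domain convolution as a stand-in for the FFT stage. The function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Direct-form (time-domain) convolution of x with h.
std::vector<float> convolve(const std::vector<float>& x,
                            const std::vector<float>& h) {
    if (x.empty() || h.empty()) return {};
    std::vector<float> y(x.size() + h.size() - 1, 0.0f);
    for (std::size_t n = 0; n < x.size(); ++n)
        for (std::size_t k = 0; k < h.size(); ++k)
            y[n + k] += x[n] * h[k];
    return y;
}

// Split h into a head (first headLength taps) and a tail (the rest),
// convolve each separately, and sum with the tail delayed by headLength.
std::vector<float> splitConvolve(const std::vector<float>& x,
                                 const std::vector<float>& h,
                                 std::size_t headLength) {
    assert(headLength <= h.size());
    if (x.empty() || h.empty()) return {};
    auto split = h.begin() + static_cast<std::ptrdiff_t>(headLength);
    std::vector<float> head(h.begin(), split);
    std::vector<float> tail(split, h.end());

    std::vector<float> y(x.size() + h.size() - 1, 0.0f);

    // Early part: direct form, so it can respond to the newest input sample.
    std::vector<float> early = convolve(x, head);
    for (std::size_t i = 0; i < early.size(); ++i) y[i] += early[i];

    // Late part: in a real system this would be the FFT stage. Its output
    // only depends on input that is at least headLength samples old.
    std::vector<float> late = convolve(x, tail);
    for (std::size_t i = 0; i < late.size(); ++i) y[i + headLength] += late[i];

    return y;
}
```

Because the late part never needs input newer than `headLength` samples, a block-based stage has a whole block of time to compute it, while the short direct-form head covers the start of the response with no added delay.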

Teaching with Bela

If you are interested in learning more about Bela in the classroom, check out our Teaching Spotlight Series to see how universities, art colleges and conservatoires are using Bela in their teaching all around the world. You can also find extensive teaching materials in the tutorial section of learn.bela.io. Don’t hesitate to get in touch with us: we’re always happy to hear from people using our tools, and we also offer educational discounts.