Teaching Spotlight

Master’s in Music, Communication & Technology across two universities in Norway

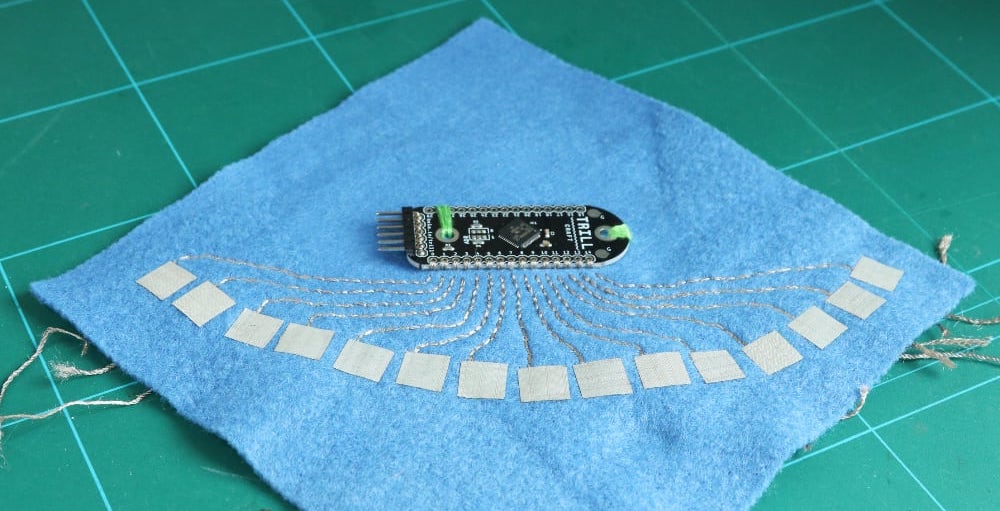

The Music, Communication & Technology joint Master’s programme takes place between the Norwegian University of Science and Technology and the University of Oslo. In this post we take a look at some of the brilliant projects that came out of the Interactive Music Systems module, which is taught using Bela and Trill sensors.

SamTar by Aleksander Tidemann

Aleksander’s project was a prototype of an augmented electric guitar (SamTar) for playing and exploring sample-based music. He wanted to interact with and play back samples through a guitar interface, hoping to open up new perspectives on the sampler and perhaps create a few novel affordances.

The system uses a Bela, Pure Data and five sensors, all mounted on the face of an electric guitar.

Design

To trigger samples on the SamTar you hit the instrument’s single string. Each hit triggers a sample and also adjusts the amplitude at which it is played back. The pitch of the samples is controlled via sensors on the neck of the guitar.
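The hit-to-sample behaviour can be pictured as an onset detector running on the string signal. This is a minimal Python sketch for readability, not Aleksander’s Pure Data implementation: it assumes a peak-hold envelope follower with hypothetical `on`/`off` thresholds and a `decay` factor, and it uses each hit’s peak level as the playback gain.

```python
def detect_hits(samples, on=0.2, off=0.05, decay=0.99):
    """Return (sample_index, gain) for each detected string hit.

    Hypothetical thresholds: a hit starts when the envelope rises above
    `on` and ends once it decays below `off`. The gap between the two
    (hysteresis) stops a vibrating string retriggering on every cycle.
    """
    hits = []
    env = 0.0
    active = False
    for i, x in enumerate(samples):
        # Peak-hold envelope follower: jumps up instantly, decays slowly.
        env = max(abs(x), env * decay)
        if not active and env >= on:
            active = True
            hits.append([i, env])
        elif active:
            if env < off:
                active = False
            else:
                # Track the loudest point of the current hit.
                hits[-1][1] = max(hits[-1][1], env)
    # Gain is the hit's peak level, clamped to 1.0.
    return [(i, min(peak, 1.0)) for i, peak in hits]


signal = [0.0] * 10 + [0.8, -0.7, 0.6, -0.5] + [0.0] * 600 + [0.4, -0.3]
for index, gain in detect_hits(signal):
    print(f"trigger sample at {index} with gain {gain:.2f}")
```

Harder hits produce a higher peak and therefore a louder sample, which matches the idea of hits both triggering and scaling playback.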

Custom cape designed for the SamTar.

Choosing different samples with one button

To categorise and organise samples, Aleksander created a 2D sample space in Pure Data for navigating various sample banks. The space is explored via a single button mounted on the guitar, which skips to different segments and scenes when pressed.
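One way to picture single-button navigation is a grid of scenes (rows) and segments (columns) that each press steps through in order. This Python sketch is hypothetical: the `SampleSpace` class, the row-major traversal and the wrap-around are assumptions for illustration, not a description of the actual Pd patch.

```python
class SampleSpace:
    """Hypothetical one-button navigator for a grid of sample banks.

    Rows are 'scenes' and columns are 'segments'; each press steps to the
    next segment and wraps into the next scene after the last one.
    """

    def __init__(self, scenes, segments_per_scene):
        if scenes < 1 or segments_per_scene < 1:
            raise ValueError("grid must be at least 1 x 1")
        self.scenes = scenes
        self.segments = segments_per_scene
        self.scene = 0
        self.segment = 0

    def press(self):
        """Advance one cell and return the new (scene, segment) position."""
        self.segment += 1
        if self.segment == self.segments:
            self.segment = 0
            self.scene = (self.scene + 1) % self.scenes
        return self.scene, self.segment


space = SampleSpace(scenes=2, segments_per_scene=3)
print([space.press() for _ in range(6)])
# [(0, 1), (0, 2), (1, 0), (1, 1), (1, 2), (0, 0)]
```

A physical button would also need debouncing so that one press does not register as several steps.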

The p5.js GUI showing position in the sample space.

In this prototype a p5.js GUI was also used to display the player’s current “location” in the sample space.

For more info on the implementation see this post.

The Dolphin Drum by Simon Sandvik

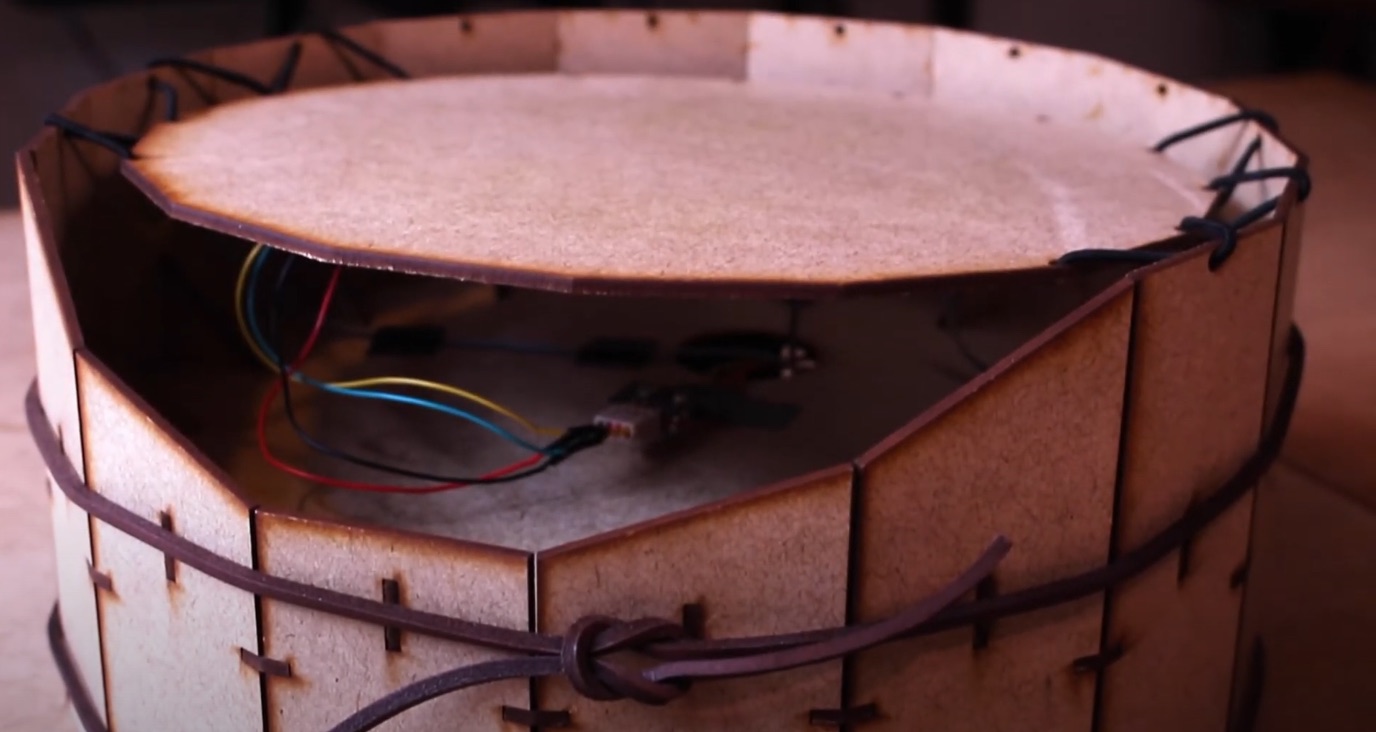

Simon’s project turns a vase into a tactile percussive instrument that uses granular synthesis. It is a hollow vase with different textures on its surface, which makes it well suited to a wide range of sound generation.

A piezo disc mounted inside the vase is used as the sound input for the system. The vase also has a speaker embedded inside, so all of the output feeds acoustically back into the system, picking up the acoustic qualities of the vase.

Design

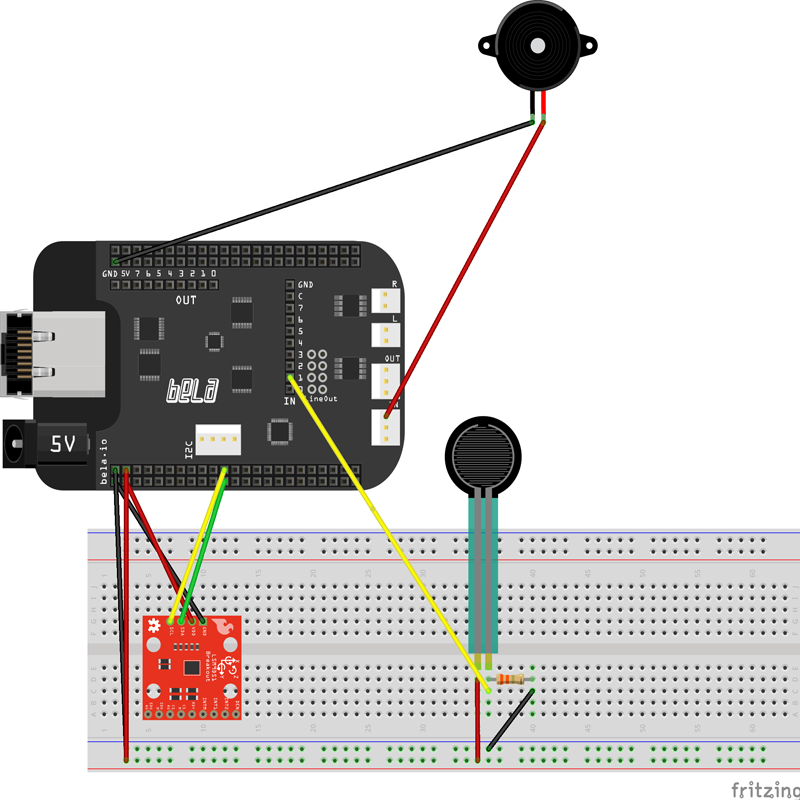

In addition to the vase, which is the main part of the instrument, there is a 9DOF accelerometer and a force-sensitive resistor. By rotating the accelerometer around its three axes you can interact with multiple parameters of the granular synthesis: window size, pitch and delay. The force sensor is mapped to control the feedback in the system.
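A minimal Python sketch of this kind of sensor-to-parameter mapping, assuming accelerometer readings in g and a force-sensor reading normalised to 0..1. The parameter ranges, the `scale` helper and the `dolphin_params` function are hypothetical; pitch is mapped through semitones so that the centre position leaves the pitch unchanged.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped."""
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def dolphin_params(ax, ay, az, fsr):
    """Map accelerometer axes (in g) and an FSR reading (0..1) to
    hypothetical granular-synthesis and feedback parameters."""
    semitones = scale(ay, -1.0, 1.0, -12.0, 12.0)
    return {
        "window_ms": scale(ax, -1.0, 1.0, 10.0, 200.0),
        "pitch_ratio": 2.0 ** (semitones / 12.0),  # +/- one octave
        "delay_ms": scale(az, -1.0, 1.0, 0.0, 1000.0),
        # Cap the digital feedback gain below 1.0 to help keep the loop stable.
        "feedback": scale(fsr, 0.0, 1.0, 0.0, 0.95),
    }


print(dolphin_params(0.0, 0.0, 0.0, 0.5))
```

Clamping in `scale` means an over-tilted sensor simply pins a parameter at the end of its range instead of producing out-of-range values.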

Controlling the Dolphin Drum

With a large amount of delay and feedback in the system, the performer is continuously reacting to the system’s output and exploring the resulting sonic landscape. The Dolphin Drum is a dynamic toolbox for generating interesting sounds that rely heavily on the initial acoustic input.

Simon noted that controlling the parameters becomes a balancing act: less about traditional instrumental skill and more an exploratory journey that relies heavily on build-ups, risers, noise and percussive sounds.

For more info on the implementation see this post.

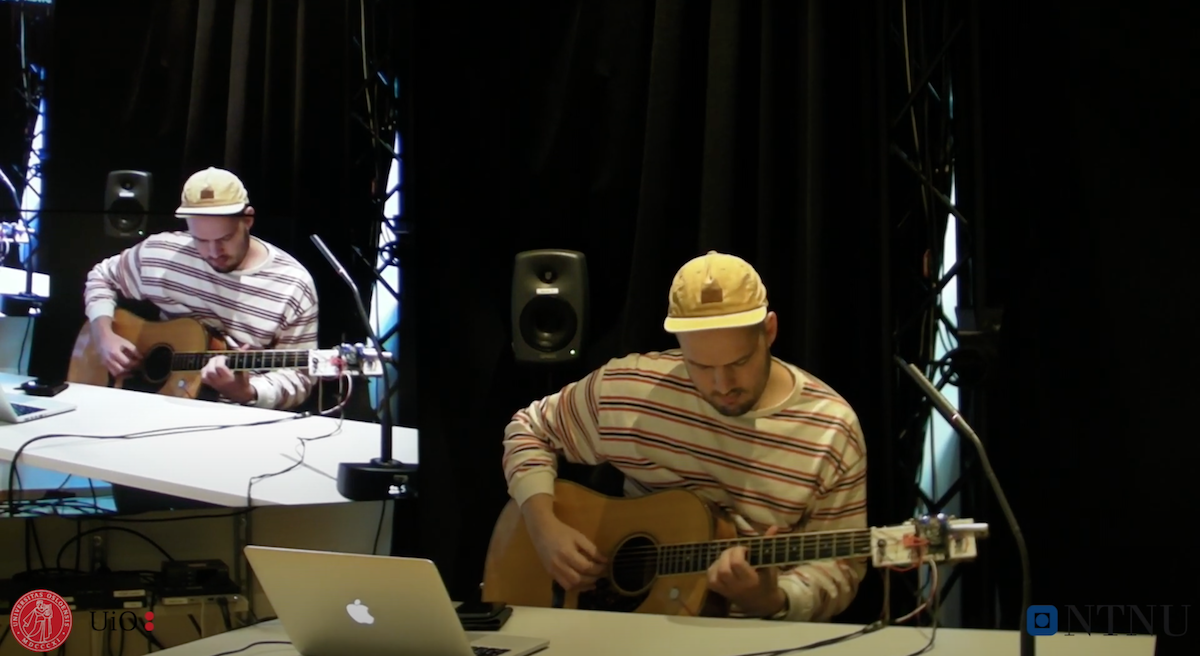

The Hyper Guitar by Thomas Anda

Thomas’s project was a hyper guitar in which the acoustic sound of the instrument blends seamlessly with the processed sound.

Watch a video of the project in action here.

Design

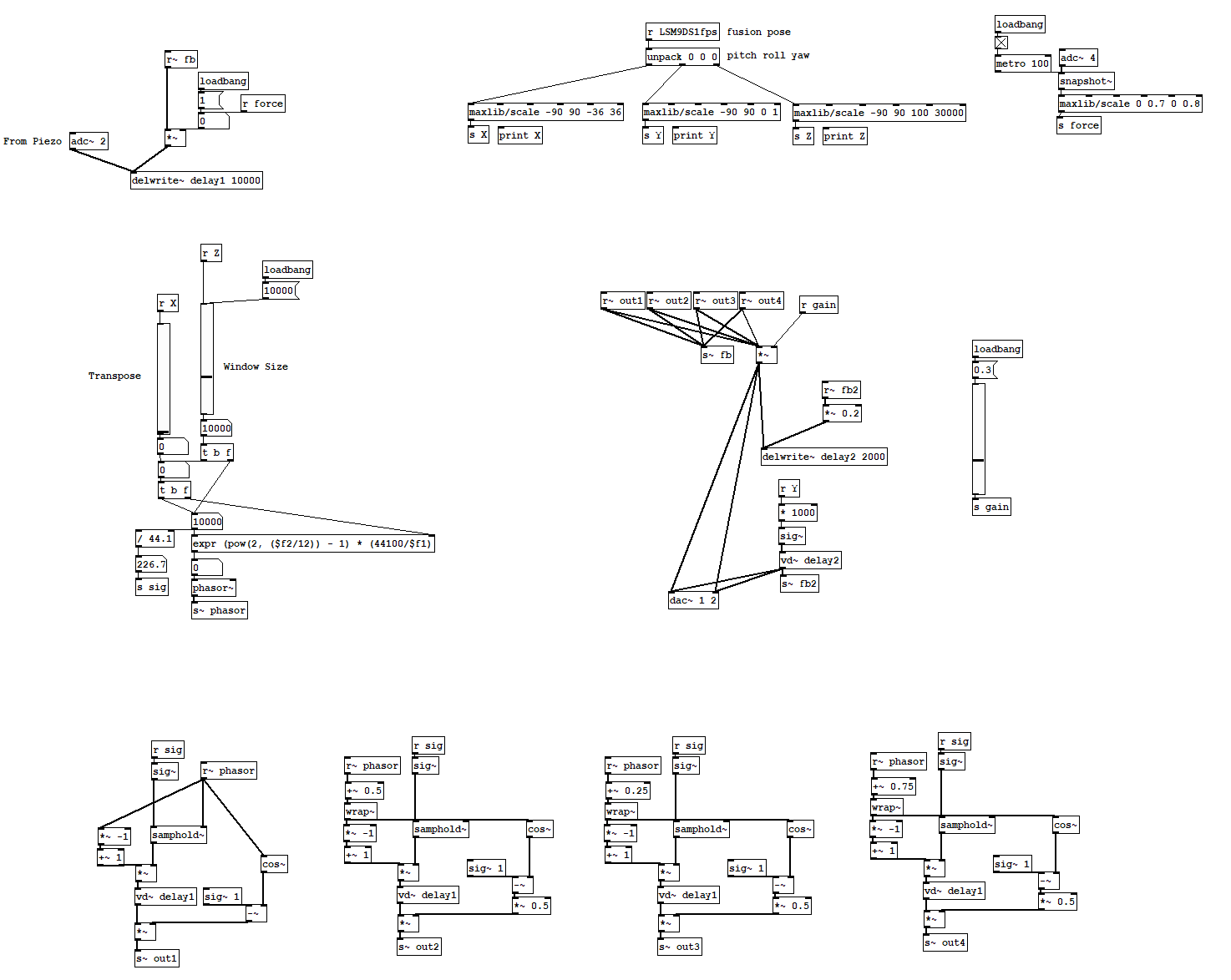

This instrument uses a SparkFun LSM9DS1 9DOF IMU. The IMU houses a 3-axis accelerometer, a 3-axis gyroscope and a 3-axis magnetometer, giving nine degrees of freedom (9DOF). By analysing the outputs of the three sensors and fusing them together, you can calculate roll, pitch and yaw.
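As an illustration of the idea, roll and pitch can be estimated from gravity alone, and a complementary filter is one common, simple way to fuse that estimate with the gyroscope. This Python sketch uses those standard formulas; it is not necessarily the fusion method used in this project, the function names are hypothetical, and yaw would additionally require the magnetometer.

```python
import math


def accel_tilt(ax, ay, az):
    """Roll and pitch (radians) from a static accelerometer reading.

    Uses gravity only, so it is noisy while the sensor moves and cannot
    give yaw. Axis signs depend on how the sensor is mounted.
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.sqrt(ay * ay + az * az))
    return roll, pitch


def complementary_step(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """One update of a complementary filter.

    Integrates the gyroscope rate (smooth, but drifts) and blends in the
    accelerometer angle (noisy, but drift-free) with weight 1 - alpha.
    """
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


# Sensor tilted so gravity splits between y and z: roughly 30 degrees of roll.
roll, pitch = accel_tilt(0.0, 0.5, math.sqrt(3) / 2)
print(math.degrees(roll), math.degrees(pitch))
```

The `alpha` value trades responsiveness against drift: closer to 1 trusts the gyroscope more between updates.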

Thomas mapped the pitch and roll of the IMU to different parameters of a spectral delay and a granular synthesis patch. All of the audio programming is done in Pure Data. In the GIF below you can see how the pitch is mapped to the bin position of the spectral delay.
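A Python sketch of mapping a tilt angle to an FFT bin index, assuming a 1024-point FFT at 44.1 kHz and a usable range of -90° to 90°. These values and the function names are hypothetical rather than taken from Thomas’s patch.

```python
def angle_to_bin(pitch_deg, fft_size=1024, lo_deg=-90.0, hi_deg=90.0):
    """Map a tilt angle to an FFT bin index in [0, fft_size // 2]."""
    n_bins = fft_size // 2 + 1          # bins from DC up to Nyquist
    t = (pitch_deg - lo_deg) / (hi_deg - lo_deg)
    t = min(max(t, 0.0), 1.0)           # clamp angles outside the range
    return round(t * (n_bins - 1))


def bin_to_hz(bin_index, fft_size=1024, sample_rate=44100.0):
    """Centre frequency of an FFT bin."""
    return bin_index * sample_rate / fft_size


b = angle_to_bin(30.0)
print(b, bin_to_hz(b))
```

A linear mapping spaces bins evenly in hertz, so most of the tilt range ends up covering high frequencies; a logarithmic mapping might feel more musical.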

Thomas had the following to say about his project:

…the main issue when creating an interactive music system where you involve a guitar, is that you don’t have much spare bandwidth. Both of your hands are involved in the sound creation and letting go of the strings will stop the musical output. Therefore a motion sensor fits perfectly in this context.

For more info on the implementation see this post.

Musings with Bela by Jackson Goode

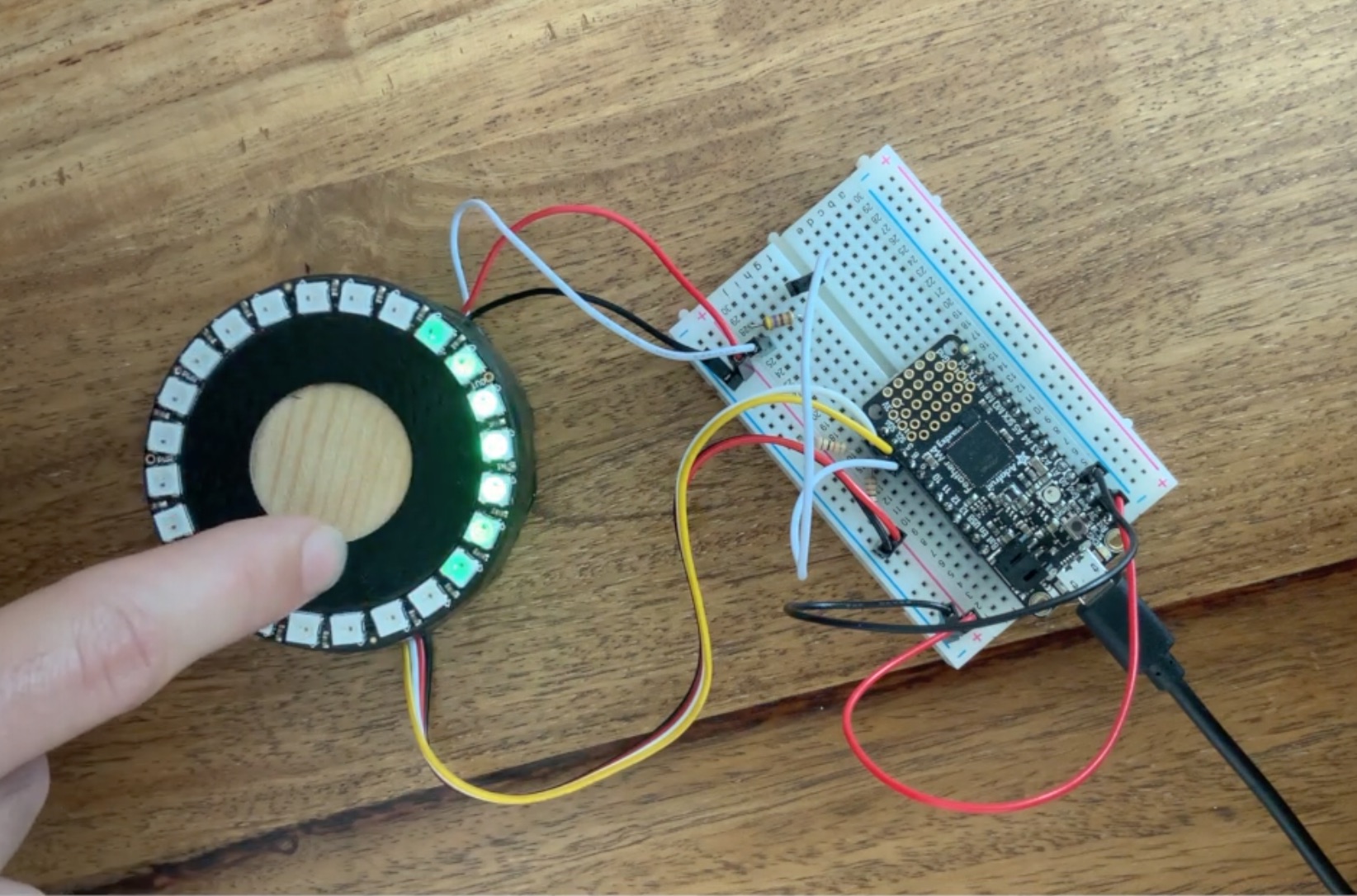

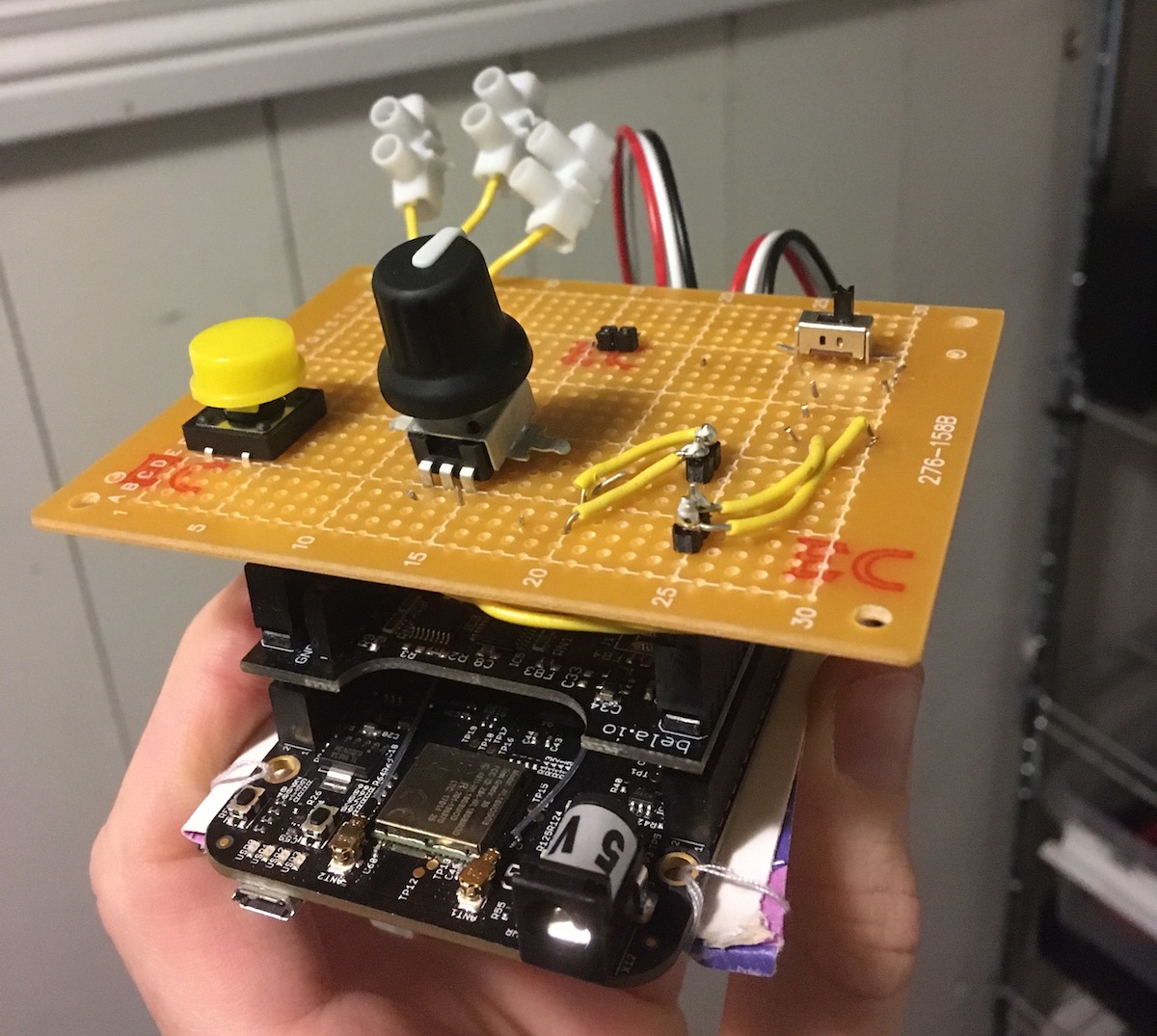

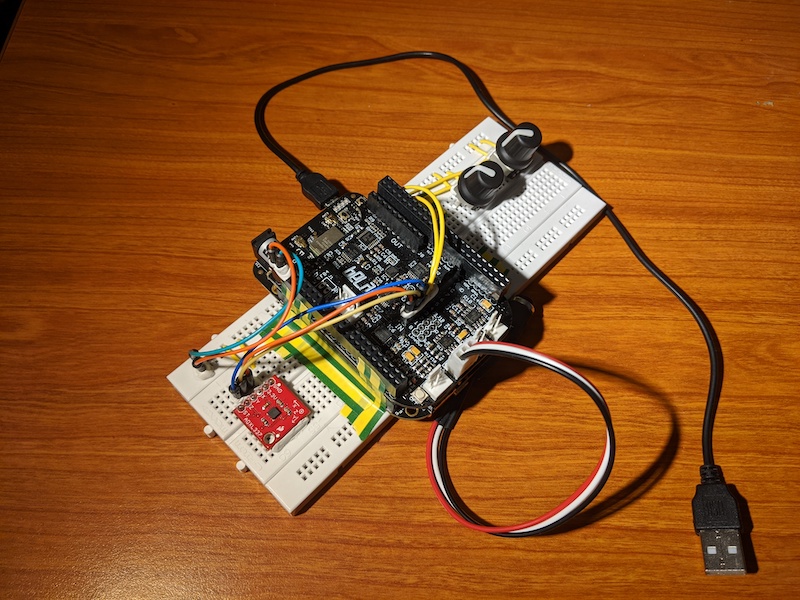

For his project, Jackson did an admirable job of bringing together a portable EEG reader and Bela, along with an accelerometer and potentiometers.

Watch a video of the project in action here.

Design

This project incorporates a Muse portable EEG headband with four electrodes recording at 256 Hz, which communicated with the system via Bluetooth. The EEG data was streamed to the Bela, which also had a couple of potentiometers and an accelerometer attached.

At the core of the system is a method for interpolating between short audio grains: audio files are read into arrays, which are then interpolated using iemmatrix, a library of Pure Data externals.
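The interpolation step can be illustrated with plain linear interpolation between equal-length tables. This Python sketch does not use iemmatrix and is not Jackson’s patch; the `interpolate_tables` function and the idea of a single fractional `position` across several tables are assumptions.

```python
def interpolate_tables(tables, position):
    """Blend neighbouring tables at a fractional position.

    Hypothetical helper: position 0.0 returns tables[0], 1.0 returns
    tables[1], 0.5 returns an equal mix of the two, and so on.
    All tables are assumed to have the same length.
    """
    if not tables:
        raise ValueError("need at least one table")
    last = len(tables) - 1
    position = min(max(position, 0.0), float(last))  # clamp to valid range
    lo = int(position)              # index of the lower table
    hi = min(lo + 1, last)          # index of the upper table
    frac = position - lo            # blend weight towards the upper table
    a, b = tables[lo], tables[hi]
    return [(1.0 - frac) * x + frac * y for x, y in zip(a, b)]


grains = [[0.0, 0.0], [1.0, -1.0]]
print(interpolate_tables(grains, 0.25))  # [0.25, -0.25]
```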

The two physical pots control an oscillator that reads from the resulting table; they act as tuners for the synth, whose timbre can be explored by rotating the whole breadboard. The EEG stream modulates the amplitude of the table being read, so that, in theory, a wandering, active mind would lead to a distorted synth.
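A table-reading oscillator of this kind can be sketched as a phase accumulator with linear interpolation. In this Python sketch `frequency` stands in for the pot-controlled tuning and `gain` for the EEG-derived amplitude; treating the distortion as hard clipping when the gain exceeds 1 is an assumption about how the effect arises, and the `TableOscillator` class is hypothetical.

```python
class TableOscillator:
    """Hypothetical wavetable oscillator with linear interpolation and hard clipping."""

    def __init__(self, table, sample_rate=44100.0):
        if not table:
            raise ValueError("table must not be empty")
        self.table = list(table)
        self.sample_rate = sample_rate
        self.phase = 0.0  # read position, in table samples

    def process(self, frequency, gain, n):
        size = len(self.table)
        # Table samples to advance per output sample.
        step = frequency * size / self.sample_rate
        out = []
        for _ in range(n):
            idx = int(self.phase)
            frac = self.phase - idx
            a = self.table[idx % size]
            b = self.table[(idx + 1) % size]
            y = gain * (a + frac * (b - a))
            # Gains above 1 push the waveform into the clip: audible distortion.
            out.append(min(max(y, -1.0), 1.0))
            self.phase = (self.phase + step) % size
        return out


osc = TableOscillator([0.0, 1.0, 0.0, -1.0], sample_rate=4.0)
print(osc.process(frequency=1.0, gain=2.0, n=4))  # [0.0, 1.0, 0.0, -1.0]
```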

For lots more information on the implementation see Jackson’s post.

MCT Master’s

The Music, Communication & Technology (MCT) joint Master’s programme takes place between the Norwegian University of Science and Technology and the University of Oslo. These brilliant projects come from the Interactive Music Systems module, where the focus is on building interactive systems and instruments using Bela and Trill, amongst other components. For further information you can also check out their blog.